I have seen firsthand how digital transformation is sparking growth in businesses worldwide. Much of this is owed to neural models and ever-improving data processing. For over sixteen years, my work—now crystallized in my project, Adriano Junior—has centered on building digital solutions powered by intelligent models that solve tangible business problems, whether it's smarter sales predictions or fully automated workflows. In the following guide, I want to explain, in practical and human terms, what deep learning is, how it works, and where your company can actually expect results.

Introduction: Why neural architectures are everywhere

When people look around and see AI translating, recognizing faces, reading documents, or talking to customers, it usually traces back to neural architectures stacked layer upon layer. These models are called "deep" because they use many processing stages between input and output.

Since the pandemic, I’ve watched more businesses ask for automation and smarter prediction, reflecting global shifts. According to the LSE Business Review, about two-thirds of firms have adopted new digital technologies after COVID-19, with 25% investing in artificial intelligence this decade. The world is shifting fast, and what was research in universities just a decade ago is now embedded in the core of digital businesses.

Yet, deep learning is not the same as old-school machine learning. I remember working in 2010, training simple systems to sort emails as spam or not, relying on human-designed logic. Today, neural models can analyze thousands of features and learn patterns impossible to hand-craft, driving outputs for vision, language, audio, and structured data.

The deeper the network, the richer its understanding.

Defining deep learning and distinguishing it from machine learning

Deep learning is a technique for automatically learning patterns and rules from data using multi-layered neural networks, which mimic some aspects of human perception. These networks transform raw data (images, text, signals) into useful representations through many processing “layers”, each picking out features and relationships of growing complexity.

Traditional machine learning, by contrast, typically relies on simpler models: linear decision boundaries, tree-based rules, or a shallow set of transformations. If you’ve ever used a decision tree, a logistic regression, or a single-layer perceptron, you have used classic machine learning. These models remain useful, but their ability to capture nuance or complexity in raw data is quite limited.

- Machine learning relies on engineered features, often built by experts.

- Deep neural networks “learn” these features themselves, end to end, given large enough datasets and computational power.

- With deep architectures, much less manual intervention is needed for domains such as vision, audio, and text.

I get asked a lot: “Does this mean the model is ‘intelligent’?” No, not in the way people are. But stacked layers can extract patterns so subtle or high-dimensional that they achieve surprising performance—sometimes surpassing humans at specific tasks.

With enough depth, a system can learn to “see” or “read” in ways earlier models never could.

The anatomy of neural networks: How layers unlock intelligence

Every deep neural model is, at its heart, a series of connected processing nodes called “neurons.” Each neuron receives inputs, performs a transformation (usually a weighted sum plus nonlinearity), and passes results to nodes in the next layer. With enough layers, these models start to not just recognize simple shapes or sounds, but put together highly abstract or multifaceted concepts.

Let me break down the typical architecture:

- Input layer. Receives raw data—pixels, audio, text tokens, or numbers.

- Hidden layers. Each layer processes the previous one’s signals, forming increasingly abstract features. This is where “depth” comes in—a deep network may use dozens or even hundreds of layers.

- Output layer. Produces predictions or labels—classifying an image, generating a text, or forecasting a trend.

Deep models are defined by having many hidden layers—making them “deep” rather than “shallow.” Each additional layer enables the model to capture more complex patterns, interactions, and relationships in the data.
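To make the input, hidden, and output layers concrete, here is a minimal sketch in plain Python. The weights are hand-picked for illustration (a trained network would learn them), and the names `dense` and `forward` are my own, not from any library.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One layer: each neuron computes a weighted sum plus bias, then a nonlinearity."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical, hand-picked weights for illustration only.
hidden_w = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]  # 2 hidden neurons, 3 inputs each
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]                         # 1 output neuron, 2 inputs
output_b = [0.0]

def forward(x):
    hidden = dense(x, hidden_w, hidden_b, relu)          # hidden layer
    return dense(hidden, output_w, output_b, sigmoid)    # output layer

prediction = forward([1.0, 2.0, 3.0])  # input layer: three raw numbers
print(prediction)  # a single value between 0 and 1, about 0.0998
```

A deeper network would simply chain more `dense` calls between the input and the output.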

The architecture becomes more specialized depending on the problem. Let’s look closer at the most popular types.

Main types of deep neural architectures

Over the years, various neural network structures have evolved for different types of data and problems. Here’s how I usually approach which to use:

- Convolutional networks (CNNs), for images, video, and spatial data

- Recurrent networks (RNNs), for sequences, like text or audio

- Generative adversarial networks (GANs), for generating new data, such as images or audio

- Transformer models, for tasks with context, like language, long documents, or code

- Autoencoders, for unsupervised representation learning and anomaly detection

Research like the 2017 Brunel University review compared autoencoders, CNNs, deep belief networks and restricted Boltzmann machines across tasks like speech and pattern recognition, showing the versatile uses of each structure.

The right architecture can turn raw input into actionable knowledge.

Convolutional neural networks: Giving vision to machines

Back when I built my first image classifier, I hand-designed features—edges, colors, textures—and passed those to shallow models. CNNs turned this entire process upside down. Convolutional networks automatically "see" visual features, like lines, shapes, and objects, by sliding small filters across the input image.

- Early layers detect edges or blobs.

- Deeper layers assemble these into textures, shapes, and whole objects.

- CNNs also incorporate pooling, which makes the network more robust to image shifts and changes.
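As a rough sketch of what "sliding a filter" and "pooling" mean, the snippet below applies a hand-written vertical-edge filter to a tiny image and then max-pools the result. The filter values and the 5x5 image are assumptions chosen for illustration; in a real CNN the filters are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this does not flip the kernel
    (strictly speaking, it is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the strongest response in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]

# Dark on the left (0), bright on the right (1): a vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# The same edge, shifted one pixel to the left.
shifted = [[0, 1, 1, 1, 1] for _ in range(5)]

vertical_edge = [[-1, 1], [-1, 1]]  # hand-written filter, not a learned one

edges = convolve2d(image, vertical_edge)
pooled = max_pool(edges)
pooled_shifted = max_pool(convolve2d(shifted, vertical_edge))

print(edges[0])        # [0, 2, 0, 0]: the filter fires only at the edge
print(pooled)          # [[2, 0], [2, 0]]
print(pooled_shifted)  # [[2, 0], [2, 0]]: same result despite the shift
```

The last two lines show why pooling helps: a one-pixel shift changes the raw feature map but leaves the pooled summary unchanged.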

Because of their architecture, convolutional networks are used in:

- Image recognition and classification (e.g., recognizing cats vs. dogs)

- Medical imaging, finding tumors or anomalies in X-rays or MRIs

- Automated product inspection in manufacturing

- Facial recognition in security and social media

- Self-driving car vision systems

Convolutional layers notice what people do not.

In practice with Adriano Junior and similar projects, I often use CNNs for rapid prototyping of inspection systems. They reduce error rates and deliver outputs in milliseconds, enabling everything from real-time quality control to facial analytics for customer engagement.

Recurrent neural networks: Understanding sequences and memory

Many real-world problems require context: analyzing a sentence word by word, predicting stock prices day by day, or interpreting an audio signal over time. Recurrent neural networks add feedback loops, letting the network "remember" information from earlier steps in the sequence.
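Here is a minimal sketch of that feedback loop, assuming a single hidden unit and hand-picked weights (`w_x`, `w_h`, and `b` are illustrative values, not trained ones). The hidden state `h` is the network's "memory": each step mixes the new input with whatever was carried over from the previous step.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: combine the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    h = 0.0  # empty memory before the sequence starts
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)  # still non-zero at the last step: the first input is "remembered"
print(states_b)  # [0.0, 0.0, 0.0]
```

Note that the remembered signal in `states_a` shrinks at every step, which hints at why plain recurrent networks have trouble holding on to context over long sequences.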

Standard RNNs face the so-called vanishing gradient problem—longer-term context fades. So, engineers developed more advanced recurrent forms:

- LSTM (Long Short-Term Memory) units

- GRU (Gated Recurrent Unit) cells

These let RNNs capture context over dozens, even hundreds, of time steps.

- Used in text generation, speech recognition, translation

- Analyzing financial or sensor time series

- Extracting meaning from long customer interactions

- Forecasting demand or product trends

RNNs help machines "listen" over time.

From building chatbots to smart notification systems, I’ve found RNNs and especially LSTMs effective whenever the past matters—like understanding customer sentiment across a conversation or generating accurate, personalized replies.

Generative adversarial networks: Teaching creativity to computers

GANs were a turning point for synthetic data, image creation, and creative AI tasks. Generative adversarial networks pit two networks against each other—a generator, trying to create realistic data, and a discriminator, trying to spot fake from real. Over time, both improve, until the generator produces highly convincing fake data.

- Generating new images, video, or audio from existing datasets

- Photo enhancement and restoration (colorizing black-and-white images, upscaling resolution)

- Data augmentation—creating more examples for training when data is scarce

- Design and artwork assistance

It sounds a bit like magic—at first, the generator’s images are nonsense. But by learning from the discriminator's feedback, it begins producing realistic output. For business, GANs lower entry barriers for realistic visual content, simulations, and testing, especially where real data collection is costly.
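The tug-of-war between the two networks can be sketched through their loss functions alone. The snippet below assumes the common binary cross-entropy formulation and uses made-up discriminator scores for an "early" and a "late" stage of training; no actual networks are trained here.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for a single probability strictly between 0 and 1."""
    return -(target * math.log(prediction)
             + (1 - target) * math.log(1 - prediction))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants real samples scored near 1 and fakes near 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # The generator wants its samples scored as real (non-saturating form).
    return bce(d_fake, 1.0)

# Hypothetical discriminator scores, for illustration only.
early = {"d_real": 0.9, "d_fake": 0.1}  # fakes are easy to spot
late = {"d_real": 0.6, "d_fake": 0.5}   # fakes are nearly indistinguishable

for name, s in (("early", early), ("late", late)):
    print(name,
          round(discriminator_loss(s["d_real"], s["d_fake"]), 3),
          round(generator_loss(s["d_fake"]), 3))
```

As the fakes become more convincing, the generator's loss falls while the discriminator's loss rises, which is the feedback signal each side trains on.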

GANs create data where none existed.

Transformers: A new foundation for language and large-scale context

If you’ve read about “large language models,” transformers are behind them. Transformers changed the game by learning context and relationships through attention, without needing the step-by-step recurrence that RNNs rely on.

Transformers use attention mechanisms to weigh which parts of the input matter most for each output, making them especially good for encoding long-range dependencies in text or code.

- Language translation (comparing whole sentences, not just words)

- Question answering, chatbots, and search

- Summarizing long documents

- Text-to-speech and speech-to-text conversion

- Protein folding and code completion
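The attention idea behind all of these uses can be sketched in a few lines. The snippet below is a simplified, single-query version of scaled dot-product attention in plain Python; the keys, values, and query are toy vectors I made up, and real transformers add learned projections and multiple attention heads on top of this.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query over a list of key/value vectors."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)  # how much each input position matters
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Toy vectors, for illustration only.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [2.0, 0.0]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])  # positions 0 and 2 get most of the weight
print([round(o, 3) for o in output])
```

Because every position is scored against the query in one pass, distant parts of the input are as easy to attend to as adjacent ones.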

Transformers read “the whole book,” not just a page at a time.

For any business that handles documents, customer queries, contracts, or large volumes of content, transformer-based models bring automation to classification, extraction, or even translation, making processes much faster and more scalable.

Specialized architectures: Autoencoders, deep belief networks, and beyond

Sometimes, the challenge isn’t labeling, but discovering structure, reducing noise, or finding anomalies. Autoencoders condense input data into a smaller “bottleneck” and reconstruct it, forcing the model to focus on what’s truly important. This helps with compression, anomaly detection, and representation learning.

- Fault detection in manufacturing

- Fraud detection in finance

- Reducing file sizes and noise in signals

- Unsupervised feature discovery to improve downstream tasks
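For anomaly detection, the key idea is the reconstruction error. The sketch below uses a hand-set linear "autoencoder" with a one-number bottleneck instead of a trained network; the direction `u`, the sample points, and the threshold are all assumptions for illustration.

```python
import math

# Hypothetical hand-set weights: the bottleneck keeps only the component
# along direction u. A real autoencoder would learn this from normal data.
u = (1 / math.sqrt(2), 1 / math.sqrt(2))

def encode(point):
    """Compress a 2-D point into a single number (the bottleneck)."""
    return point[0] * u[0] + point[1] * u[1]

def decode(code):
    """Rebuild a 2-D point from the bottleneck value."""
    return (code * u[0], code * u[1])

def reconstruction_error(point):
    return math.dist(point, decode(encode(point)))

normal = (3.0, 3.1)   # follows the usual pattern (both values move together)
odd = (3.0, -2.0)     # breaks the pattern
threshold = 0.5       # assumed; in practice set from errors on known-good data

for name, p in (("normal", normal), ("odd", odd)):
    err = reconstruction_error(p)
    print(name, round(err, 3), "ANOMALY" if err > threshold else "ok")
```

Data that fits the learned pattern survives the bottleneck almost unchanged, while data that does not fit comes back distorted, and that distortion is the anomaly signal.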

Other variants, like deep belief networks or restricted Boltzmann machines, specialize in feature discovery, generative modeling, or large-scale unsupervised learning. The Brunel University review offers more comparative background if you’re curious about these less common architectures.

Compression reveals the core of the data.

How neural networks learn: Training, backpropagation, and data needs

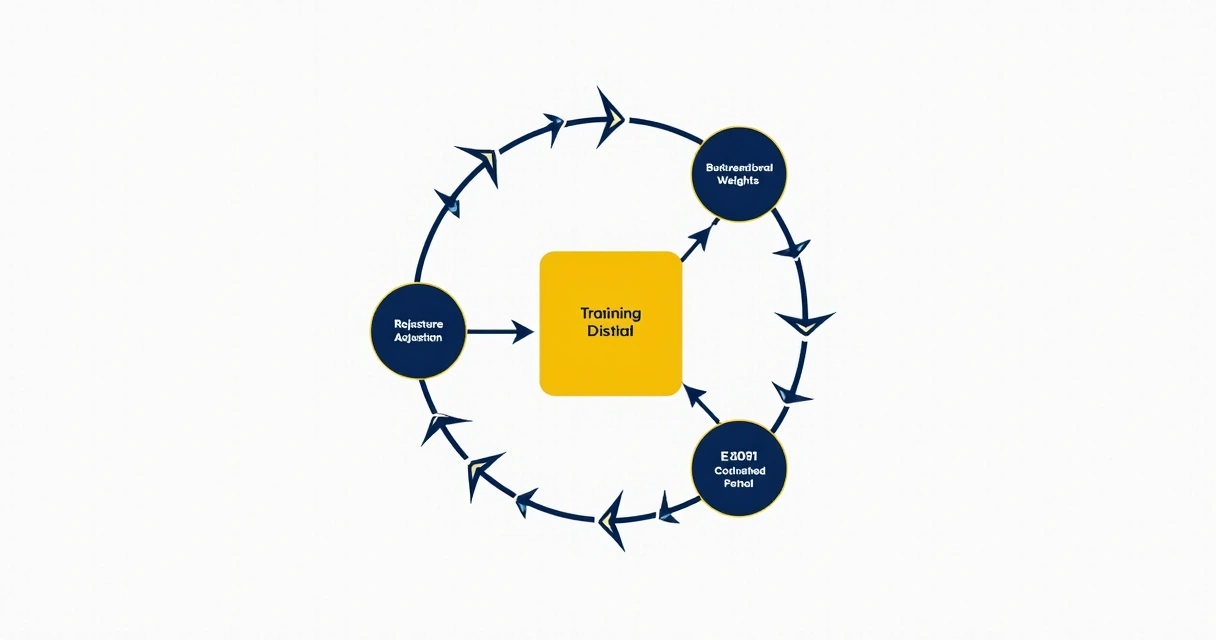

People often ask, “How does the model know what to do?” The answer is: training. The network starts off with random “weights”—the strength of connections between neurons—and learns the right settings by seeing labeled examples and adjusting until its outputs match reality as closely as possible.

The standard process goes like this:

- Feed training data (inputs and correct outputs) through the network.

- The network produces a prediction; errors are measured by a loss function (how far the prediction is from the correct answer).

- Backpropagation is used to “blame” each neuron for a share of the error, passing gradients backward through the network.

- Weights are adjusted to reduce future error.

- These steps repeat for many “epochs”, gradually reducing the average error.

Backpropagation makes layer-wise learning efficient by using the chain rule from calculus—each parameter gets updated in proportion to its role in the output error.
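The training loop and the chain rule can be sketched on a deliberately tiny network: one hidden neuron with a tanh activation feeding one linear output. Everything here (the toy data, the starting weights, the learning rate, the number of epochs) is an assumption chosen for illustration; real projects use a framework that computes these gradients automatically.

```python
import math

# Toy dataset: the network should learn to reproduce tanh(x).
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [math.tanh(x) for x in xs]

params = {"w1": 0.5, "b1": 0.0, "w2": 0.5, "b2": 0.0}  # arbitrary starting weights
learning_rate = 0.05
losses = []

for epoch in range(500):
    grads = {name: 0.0 for name in params}
    total_loss = 0.0
    for x, y in zip(xs, ys):
        # 1. Forward pass: input -> hidden neuron -> output.
        h = math.tanh(params["w1"] * x + params["b1"])
        y_hat = params["w2"] * h + params["b2"]

        # 2. Loss: squared distance from the correct answer.
        error = y_hat - y
        total_loss += error ** 2

        # 3. Backpropagation: apply the chain rule from the output backward.
        d_yhat = 2 * error                # d(loss)/d(y_hat)
        grads["w2"] += d_yhat * h
        grads["b2"] += d_yhat
        d_h = d_yhat * params["w2"]       # blame passed back to the hidden neuron
        d_pre = d_h * (1 - h ** 2)        # through the tanh nonlinearity
        grads["w1"] += d_pre * x
        grads["b1"] += d_pre

    # 4. Update: nudge each weight against its average gradient.
    for name in params:
        params[name] -= learning_rate * grads[name] / len(xs)
    losses.append(total_loss / len(xs))

print(round(losses[0], 4), round(losses[-1], 4))  # the average error shrinks
```

Each weight's update is proportional to its own gradient, so the parameters that contributed most to the error move the most.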

I see a lot of companies underestimate one thing: data. While basic models might learn from a few hundred or thousand samples, modern deep networks thrive on tens or hundreds of thousands, sometimes millions, of labeled examples.

- Image tasks with CNNs: ideally ~10,000+ well-labeled images per class

- Speech, translation, and language: millions of examples for top results

- Financial or sensor prediction: as much historical and contextual data as feasible

Common techniques in training

- Gradient descent variants: Algorithms for updating weights, like stochastic, mini-batch, or Adam optimizers

- Regularization: Dropout, weight decay, early stopping to reduce overfitting on training data

- Data augmentation: Artificially increase dataset size by slightly changing (rotating, cropping, changing color) the training images or text

- Transfer learning: Start from models trained on big, generic datasets and fine-tune on specific business data
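Of the techniques above, data augmentation is the easiest to show in code. This is a minimal sketch on a tiny grid of numbers standing in for an image; the helper names are my own, and production pipelines usually rely on an image library for this.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original plus simple variants that keep the same label."""
    return [image, flip_horizontal(image), rotate_90(image)]

image = [[1, 2],
         [3, 4]]

for variant in augment(image):
    print(variant)
# [[1, 2], [3, 4]]
# [[2, 1], [4, 3]]
# [[3, 1], [4, 2]]
```

One labeled example becomes three, which is only valid when the transformation does not change the correct label (a flipped cat is still a cat, but a flipped digit may not be the same digit).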

With these tools, even companies with moderate datasets can get value—by borrowing from well-established “pre-trained” weights, then tuning with their unique examples. In my daily work, this strategy consistently delivers real, measurable improvements with practical budgets and timelines.

The right data beats the fanciest algorithm.

From lab to business: Practical applications driving digital transformation

The consequences of better recognition, prediction, and automation are everywhere. According to The Productivity Institute, nearly 50% of UK businesses are integrating AI into core operations by 2025, a faster uptake than earlier technologies saw.

What does it mean, in practical terms? It means:

- Reading documents and extracting key data, automating workflows in finance, law, and healthcare

- Flagging fraud in banking by spotting subtle behavior or transaction patterns

- Analyzing camera feeds in real time for safety, security, or customer experience

- Demand forecasting across supply chains and logistics

- Improved personalization in marketing and ecommerce—offering the right content to the right user

- Creating virtual assistants or chatbots to answer customers instantly, 24/7

- Streamlining manufacturing inspection, cutting costs and error rates

In my own career, first as a developer and now as a project architect, I often notice that deep models’ greatest business value is not in performing a single “miracle” task, but in making routine operations scalable, consistent, and fast. Sometimes companies expect fireworks, but the breakthrough is often just reliable, quiet automation.

True AI pain relief is nearly invisible.

Case examples from the field

- Healthcare: Image-based tumor detection using CNNs, reducing false negatives in radiology

- Retail: Real-time forecast of demand spikes using RNNs, reducing overstock and missed sales

- Legal/finance: Automated contract analysis using transformer models, freeing up thousands of work-hours per year

- Manufacturing: Automated quality inspection systems powered by CNN, cutting defect rates

- Customer support: Chatbots using transformers to deliver 80%+ automation of basic client queries

Main challenges and risks in deploying neural systems

Despite the huge promise, successful projects must work through real challenges:

- Data requirements: Large, well-labeled datasets are often expensive or time-consuming to collect.

- Computational demands: Training modern networks can require days or weeks on powerful GPUs.

- Model opacity (“black box”): Decisions by deep networks are not always easy to explain or audit.

- Bias and fairness: If training data reflects biased patterns, so will the model. This is a major concern, especially for hiring, finance, or policing applications.

- Robustness: Models can be fragile—small changes to input data may cause errors if not properly tested and secured.

- Security: As shown in University of Kentucky research, neural systems used in power grids and IoT must face risks like false data injection attacks and adversarial examples.

Automation is powerful, but blind trust is risky.

I have sometimes seen companies leap in without proper validation or attention to privacy. Good practice means constant monitoring, diverse data sources, and regular updates—not just set-and-forget. Curricula like the University of York’s Intelligent Systems 3 illustrate modern ways to audit, explain, and test machine learning models for real-world trust.

Key strategies for successful adoption in business

Through years of building and deploying these systems across industries, I’ve found that companies gain most by focusing on factors such as:

- Start small, then scale. Begin with a pilot, using well-defined targets and KPIs. This avoids overinvestment before returns prove out.

- Focus on specific, pain-relieving use cases, not technology for technology’s sake. Processing invoices, automating support, cutting error rates—these give measurable progress.

- Invest in your data pipelines. The best models are no better than their input data. Consistent, diverse, well-labeled data trumps clever algorithms.

- Choose architecture by problem, not by hype or trend. Each application—vision, sound, text, sequence—demands the right tool.

- Monitor, explain, retrain. Deploying is just the start. Regular audits, user feedback, and retraining keep the systems reliable and fair.

- Develop internal capacity, but don’t hesitate to work with outside experts for audits or architecture design. The field moves too quickly to know it all alone.

Small, focused pilots lead to real wins, then scale.

Businesses that follow this path not only see immediate gains, but also build the muscle needed to adapt as technologies keep shifting.

The impact of deep architectures for digital transformation

Looking across sectors, the use of deep models and smart systems isn’t just about higher accuracy or speed—it is about enabling new ways for businesses to operate. Genuinely intelligent workflows automate the repetitive, highlight the unexpected, and free human minds for bigger questions. The impact extends from day-to-day efficiency to innovative business models.

Recent data, such as the LSE–CBI survey, confirms this trend: roughly 25% of UK companies have adopted artificial intelligence since 2020, and the pace is rising. As more software is built “intelligent-first,” the productivity gap will widen between companies automating routine learning and those stuck with traditional tools.

What about industries lagging behind?

I’ve seen that companies who hesitate often do so out of fear—fear of cost, lost jobs, or technical complexity. But the new wave of “out-of-the-box” models, pretrained on open datasets, means that mid-sized businesses can often start with smaller pilots at vastly lower cost. The return on investment arrives not from replacing staff, but from scaling their expertise, catching errors earlier, and delivering value faster to the end user.

Limitations and future prospects of deep models

Even as the technology grows, there are boundaries. Deep neural networks still struggle with:

- Noisy, incomplete, or contradictory data

- Failing in ways that are hard to predict—adversarial inputs can confuse even the best models

- Explaining their decisions in ways that humans can always understand

- Trouble generalizing when the task is very different from training data

However, the field is addressing these. More interpretable models, better benchmarking, and rigorously audited pipelines are the new standard.

As I look ahead, I expect:

- Much wider use of transfer learning, shrinking the data needed for new projects

- Smarter auto-ML systems, making it simpler to build robust pipelines without deep mathematical knowledge

- Cross-modality models—able to “see” and “read” and “hear” within a single architecture

- Synthetic data for safer, cheaper model development

- Edge deployment—running advanced models on small, local hardware for privacy or speed

I see the near future as one where even smaller and more traditional businesses will gain access to smart systems—not by learning advanced math, but by working with partners like Adriano Junior that deliver tailored, understandable solutions.

How to begin implementing deep learning in your business

If you’re considering harnessing neural models, my practical advice is:

- Identify one pain point: Something routine, repetitive, data-rich, and measurable (e.g., invoice processing, support triage, visual inspection).

- Survey your existing data: How much do you have, and is it labeled? If not, can you start capturing it now?

- Define success. What would it mean to succeed—faster turnaround, fewer errors, higher throughput?

- Engage internally and externally. Build a small team with some domain, data, and software expertise. Consider an experienced partner for design and architecture.

- Pilot, monitor, repeat. Start small, validate early, then grow scope with real-world learning.

Businesses that move methodically—focused on value, not hype—are already seeing gains. The goal is not replacing people, but enhancing what they do best.

Begin with one small step, guided by the right data and questions.

Conclusion

To sum up, deep neural networks—whether convolutional, recurrent, adversarial, or transformer-based—are now the secret engine behind smarter, faster, and more scalable business processes. Their architectures allow machines to see, read, and listen, often with superhuman consistency.

Yet even the best model is only as good as the care with which it is built, fed, and maintained. In my work at Adriano Junior, I’ve seen firsthand that the greatest advantage lies not in complexity or hype, but in targeted, understandable solutions that transform actual workflows. As companies continue to leap forward with digital tools, those who understand where and how to introduce these systems—supported by experienced partners—will capture the largest share of results.

If you're ready to discuss what intelligent systems can do for your business—or if you simply want to dig deeper into the possibilities—I invite you to reach out. Let’s have a conversation about your vision, your data, and the concrete results you hope to achieve. Together, we can turn today’s possibilities into tomorrow’s reality.

Frequently asked questions

What is deep learning in simple terms?

Deep learning is a type of artificial intelligence where a system learns from data by passing it through many layers of computer “neurons,” each layer discovering more complex patterns. In practice, it lets computers automatically recognize images, understand text, or make predictions without hand-coding all the specific rules. You teach the system by example, and with enough layers and data, it picks up how to perform the task on its own.

How does deep learning differ from machine learning?

While both learn from data, classic machine learning relies heavily on hand-crafted features and simpler models (like decision trees or linear regression), requiring experts to decide what is important. Deep learning, on the other hand, automatically discovers useful representations directly from raw data by stacking many layers of artificial neurons. This ability to learn end-to-end from raw images, text, or audio is what sets it apart from traditional machine learning.

What are common deep learning applications?

Some of the most widely used applications are:

- Image recognition (like facial or object recognition in photos)

- Speech recognition and voice assistants

- Machine translation and text summarization

- Medical diagnosis from X-rays, MRIs, and other imaging

- Fraud detection, anomaly detection in finance and manufacturing

- Self-driving vehicle perception

- Chatbots and virtual agents

- Supply chain and sales forecasting

In my work, I often see companies benefit the most from document automation, smart customer support, and predictive analytics.

Which deep learning architectures are most popular?

The four most popular are:

- Convolutional neural networks (CNNs): best for images and video.

- Recurrent neural networks (RNNs): best for sequences or time series such as text and audio.

- Generative adversarial networks (GANs): used to generate new data, especially in creative industries.

- Transformers: now the standard for language tasks, document automation, code, and even some vision tasks.

Each architecture addresses a different type of data or business need; choosing the right one is key to actual results.

Is deep learning worth learning in 2024?

Absolutely. Modern business increasingly depends on smart automation, rapid analysis, and tools able to “read” or “see” as efficiently as humans—or better. Even if you’re not an engineer, understanding the basics allows you to ask the right questions when bringing these tools into your company. The field evolves quickly, but the demand for talent and vision in applying these systems is only growing.